As artificial intelligence capabilities continue expanding at a blistering pace, businesses are rapidly exploring how to apply AI tools in the workplace.

While some optimistically view AI as a game-changing productivity multiplier, others fear the technology could make many jobs redundant.

As the line between human and machine blurs, the stakes are high for companies to get their AI strategies right.

I’m taking part in US edtech startup Section’s “mini MBA” for AI business strategy as part of a project run by Spark. The paragraphs above were written by an AI tool based on my notes, and then edited by me.

In fact, most of the top half of this article was produced that way, though the tool failed sorely with the second half, meaning I had to do actual work.

I then ran the article through a tool we’ve developed at NZME to do a basic edit of content – things like putting the article into house style, fixing spelling mistakes and ensuring te reo Māori macrons are in place.

In the third week of the course, we heard from entrepreneur Dan Slagen, from tomorrow.io, a startup that translates weather forecasts into actionable insights for companies so they can act to protect their business interests.

Slagen is an AI optimist. He wants to use AI to make his staff increasingly productive.

“I want my staff to be one plus one equals three employees who are able to do a lot more.”

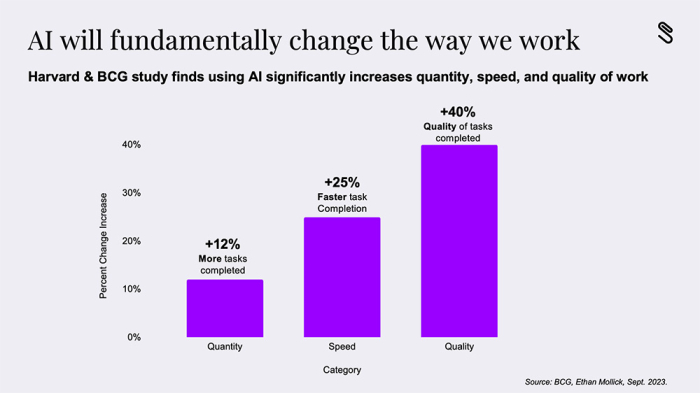

Studies from Harvard Business School and BCG show AI-assisted workers can see a 40 per cent increase in quality of work output as well as improved speed and quantity.

Slagen is rolling out a comprehensive AI strategy over the next year, with specific directives for each department to use generative AI, analysis tools and other AI capabilities to drive productivity and efficiency.

To do this, he identifies an objective and then breaks it down into up to six steps.

For example, with an AI-assisted employee onboarding flow, he would:

- Identify objectives.

- Develop timeline.

- Build materials.

- Implement.

- Refine based on feedback.

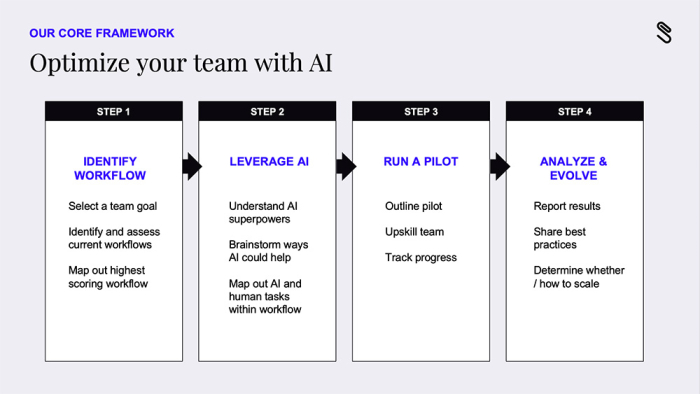

AI will have the most impact on workflows that are repeated often, that take a lot of time and brainpower, or that are important and could benefit from a second opinion.

He suggests scoring different workflows based on frequency, effort and importance.
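As a rough illustration of that scoring idea, here is a minimal Python sketch. The 1-to-5 scale, the equal weighting of the three factors and the example workflows are all my own assumptions for illustration; Slagen did not specify a formula.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    frequency: int   # assumed scale: 1 (rare) to 5 (daily)
    effort: int      # assumed scale: 1 (trivial) to 5 (lots of time and brainpower)
    importance: int  # assumed scale: 1 (minor) to 5 (critical)

    def score(self) -> int:
        # Assumption: the three factors are weighted equally and simply added.
        return self.frequency + self.effort + self.importance


def rank_workflows(workflows):
    """Return workflows sorted from highest to lowest total score."""
    return sorted(workflows, key=lambda w: w.score(), reverse=True)


# Hypothetical workflows with made-up scores.
workflows = [
    Workflow("Weekly sales report", frequency=5, effort=2, importance=3),
    Workflow("Employee onboarding", frequency=3, effort=4, importance=4),
    Workflow("Annual office party plan", frequency=1, effort=2, importance=1),
]

for w in rank_workflows(workflows):
    print(f"{w.score():>2}  {w.name}")
```

The workflows at the top of the ranking would be the first candidates to try with AI. A team could just as easily weight one factor more heavily than the others.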

In determining which workflows to tackle with AI, he suggests considering them against “generative AI superpowers”:

- Generate ideas.

- Generate content.

- Analyse content.

- Give feedback.

Finally, Slagen is emphatic that you need to put the best prompts and data you’ve got into your chosen AI tool.

“Garbage in, garbage out. Make sure you understand how to prompt and then review the output to make sure you are happy with the outputs and it is accurate.”

Deploying AI responsibly

The other interesting presentation was by a Kiwi.

Tim Sharp, founder of Sydney-based AI consultancy Gen8, emphasises the critical importance of “responsible AI” implementation. His key points:

- Deepfakes and synthetic media open tremendous potential for corporate impersonation attacks and disinformation to damage brands.

- Copyright infractions from tools that ingest proprietary text, images and audio data could spark an onslaught of lawsuits against AI companies.

- Sharp recommends using only “mature” AI products from reputable providers, such as Adobe’s Firefly image generator, which takes pains to avoid ingesting copyrighted material.

He looked into creating a virtual clone of himself but became uncomfortable about what would happen if the company he used went under, changed its policies or was hacked.

In the end, he decided to create a simple digital avatar.

Sharp presented a great example from Hong Kong, where the financial controller for a listed company was called to a meeting of their senior leadership team (SLT) on Zoom.

All were present. The SLT debated buying a company, agreed to do so, and put the financial controller in charge of payment.

The person transferred $US25m to what turned out to be very clever fraudsters using deepfakes.

(For my final project, I am planning on repeating this with NZME. (Just kidding, Mr Boggs.))

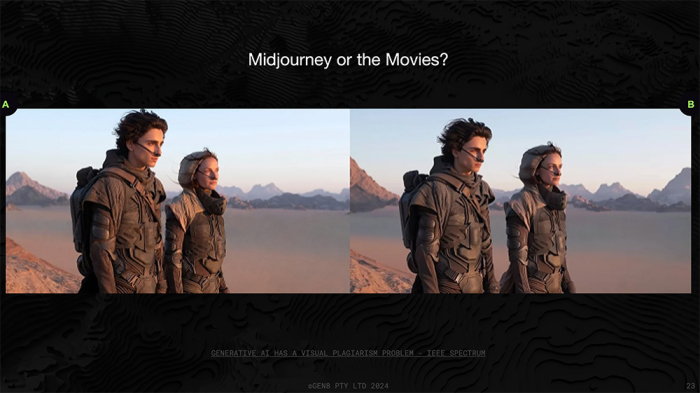

Sometimes it is almost impossible to tell what is real and what is fake.

“Generative AI has a visual plagiarism problem,” he says.

The shot on the right, from Midjourney, is fake. The one on the left is copyrighted by Warner Bros.

Still, there is a lot that can be done.

China and the US already have laws making deepfakes illegal without consent, and most platforms officially ban them.

Companies need to ask themselves: “Do we have a plan to identify and debunk impersonation attacks?”

At NZME, we see this constantly on Facebook, with spam ads leading to fake lookalike websites for the NZ Herald.

I complain about the ads to Facebook owner Meta each time I see them, and they’ve not once upheld a complaint.

Impersonation is a risk for media companies, but also for sharemarket-listed firms.

What happens if a deepfake video surfaces of your CEO revealing company “secrets” that have the potential to tank your share price?

Do you have a plan?

Sharp believes AI copyright infringement lawsuits will be one of the biggest stories of the next few years.

Firms like OpenAI started as non-profit academic endeavours, which gave them some rights to use publisher content.

But once they transitioned to for-profit firms, their use of copyrighted media was potentially no longer legal.

They were profiting off the copyrighted work.

When you start using a new AI tool, ask yourself whether the tool is really, really mature and safe to use.

“Is there a better way? Of course there is.”

For example, in the legal world there is an AI tool called KL3M, which is trained to give you copyright-safe document annotation for contracts and similar documents.

For images, Sharp says Adobe’s Firefly is the best option as it is trained on Adobe’s image library and photographers have been paid for their work.

Because the model was trained on fully licensed material, he says, you are not at risk of breaching copyright if you put its output into an ad campaign.

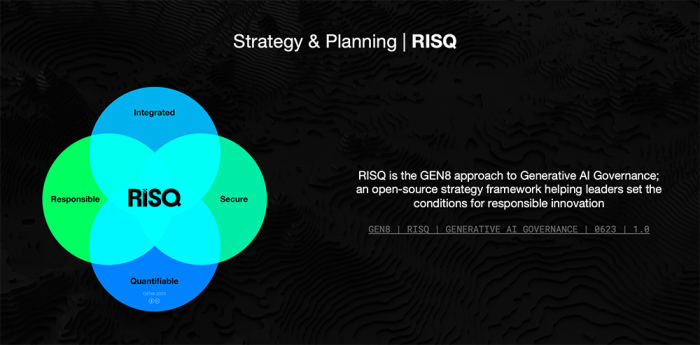

He has developed a free “RISQ” framework to help companies proactively evaluate the risks and rewards of AI use cases before deployment.

You can view that here.

He says the key is to use AI, but to think through the risks and balance them against the benefits. See Sharp’s presentation here.

The series

Articles will be published each Friday:

- Week 0: Section's CEO, Greg Shove, on the impact AI will have.

- Week 1: How to get AI to work for you and your business.

- Week 2: How to use AI to make smarter strategic decisions.

- Week 3: See above.

- Week 4: Putting it all into practice, where I will learn to design “a habit loop with a cue, craving and response”.

Spark has funded 150 places on the $5,000 course to help participants chart an AI-supported vision for their own business.

This is editorial content – Spark is not paying for it – though it has paid for my course placement and is paying for advertising to promote these articles.